As AI-generated content becomes increasingly common, it’s essential to consider how we verify the authenticity of what we read, watch, and engage with online. From classrooms to social media, AI-generated text and multimedia are everywhere. This ubiquity raises a pressing question: how can we be sure that the content we consume was created by a human rather than an AI model? AI-generated content is now nearly indistinguishable from human-created material, which makes authenticity difficult to judge.

Several tools have emerged to help detect AI-generated content. For example, platforms like GPTZero and Grammarly have released AI detectors that aim to identify whether a piece of content was generated by artificial intelligence. However, as we’ll discuss, these tools provide only limited insight and are not reliable enough for high-stakes scenarios. Detecting AI-generated content is a growing challenge that demands more robust solutions as AI models continue to advance.

Why AI-Based Content Detectors Won’t Work

AI content detectors can help to some extent, but they face inherent challenges that limit their effectiveness. With each new generation of language models, such as OpenAI’s recently released o1 model, AI becomes better at producing content that is difficult to distinguish from human-created material. Because models evolve so rapidly, detectors must constantly adapt to keep up, creating a technological arms race in which detectors are always one step behind.

Many existing AI detectors cannot provide the reliability needed for critical applications such as academic integrity reviews or legal investigations. These tools can falsely flag human-written text as AI-generated (false positives) and can also miss AI-generated text entirely (false negatives). In high-stakes scenarios these errors have serious consequences: wrongful accusations on one side, undetected AI content on the other. Businesses and individuals therefore cannot rely on AI detectors alone, especially for decisions where accuracy is paramount.
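To see why even a seemingly accurate detector causes trouble at scale, the sketch below computes the expected outcomes of running a detector over a batch of documents. The function name `detector_outcomes` and all of the rates used are hypothetical, chosen only for illustration; they are not measurements of GPTZero, Grammarly, or any other real product.

```python
def detector_outcomes(total: int, ai_share: float, tpr: float, fpr: float) -> dict:
    """Expected confusion-matrix counts for a detector run over `total` documents.

    ai_share: fraction of documents that really are AI-generated (hypothetical)
    tpr:      true-positive rate, share of AI documents the detector flags
    fpr:      false-positive rate, share of human documents wrongly flagged
    """
    ai_docs = total * ai_share
    human_docs = total - ai_docs
    true_pos = ai_docs * tpr          # AI text correctly flagged
    false_neg = ai_docs - true_pos    # AI text that slips through undetected
    false_pos = human_docs * fpr      # human authors wrongly accused
    flagged = true_pos + false_pos
    # Precision: of everything flagged, what fraction really was AI-generated?
    precision = true_pos / flagged if flagged else 0.0
    return {
        "true_pos": true_pos,
        "false_neg": false_neg,
        "false_pos": false_pos,
        "precision": precision,
    }


# Hypothetical scenario: 1,000 essays, 10% AI-written, 90% TPR, 2% FPR.
print(detector_outcomes(1000, 0.10, 0.90, 0.02))
```

Under these assumed rates, about 18 human-written essays would be flagged alongside 90 AI-written ones, so roughly one in six flagged essays would be a wrongful accusation, while 10 AI-written essays would still go undetected.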

This evolving landscape underscores the need for a more reliable, scalable approach to content verification—one that can keep up with advancements in AI models and provide trustworthy insights without the limitations of traditional detection tools.

Cryptographic Watermarking as the Future

The next frontier for authenticating content may lie in cryptographic watermarking. As AI becomes more advanced, the need for a tamper-proof verification system grows. One potential solution is to cryptographically sign AI-generated content, creating a digital "watermark" that is invisible to the human eye but verifiable through cryptographic means.

Here’s how it could work: when a model like OpenAI’s GPT generates text or when a visual model produces an image, it would use a private cryptographic key unique to the AI provider to “sign” the content. This signature would be embedded in the content itself but wouldn’t affect its appearance, meaning readers wouldn’t notice any visible watermark or alteration. Then, anyone could verify the content’s origin using a corresponding public key. This method would provide a secure way to ensure that the content has not been tampered with and genuinely originates from a specific AI model.
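The sign-with-private-key, verify-with-public-key flow described above can be illustrated with a minimal sketch. It uses textbook RSA over a SHA-256 digest with a toy key pair built from two well-known Mersenne primes. Because those primes are public and no padding is applied, this key offers no security whatsoever; a real provider would use a vetted scheme such as Ed25519 or RSA-PSS from an audited library. The function names `sign` and `verify` are hypothetical, and the sketch does not cover how the signature would be embedded in the content.

```python
import hashlib

# TOY key pair from two well-known Mersenne primes (2**127 - 1 and 2**521 - 1).
# These primes are public knowledge, so anyone can recompute D: NOT SECURE.
P = 2**127 - 1
Q = 2**521 - 1
N = P * Q                             # public modulus (shared with verifiers)
E = 65537                             # public exponent (shared with verifiers)
D = pow(E, -1, (P - 1) * (Q - 1))     # private exponent (kept by the provider); Python 3.8+


def _digest(content: bytes) -> int:
    """Hash the content with SHA-256 and read the 256-bit digest as an integer (< N)."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big")


def sign(content: bytes) -> int:
    """Provider side: sign the content's digest with the private exponent D."""
    return pow(_digest(content), D, N)


def verify(content: bytes, signature: int) -> bool:
    """Verifier side: check the signature using only the public values N and E."""
    return pow(signature, E, N) == _digest(content)


if __name__ == "__main__":
    text = b"Paragraph produced by a hypothetical AI model."
    sig = sign(text)
    print(verify(text, sig))                 # True: untouched content verifies
    print(verify(text + b" (edited)", sig))  # False: any edit breaks the signature
```

The design point is the asymmetry: only the holder of `D` can produce a signature that verifies, yet anyone holding the public pair `(N, E)` can check it, and changing even one byte of the content produces a different digest, so verification fails.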

This type of cryptographic watermarking could serve as a more sustainable solution to content verification in the age of advanced AI. Rather than relying on external detectors that become outdated as AI evolves, cryptographic signing lets content be trusted on the strength of its original creator’s signature. Because a signature covers the underlying bytes rather than any particular format, the same system could extend beyond text to videos, images, and audio files, providing a common verification method for all types of media.

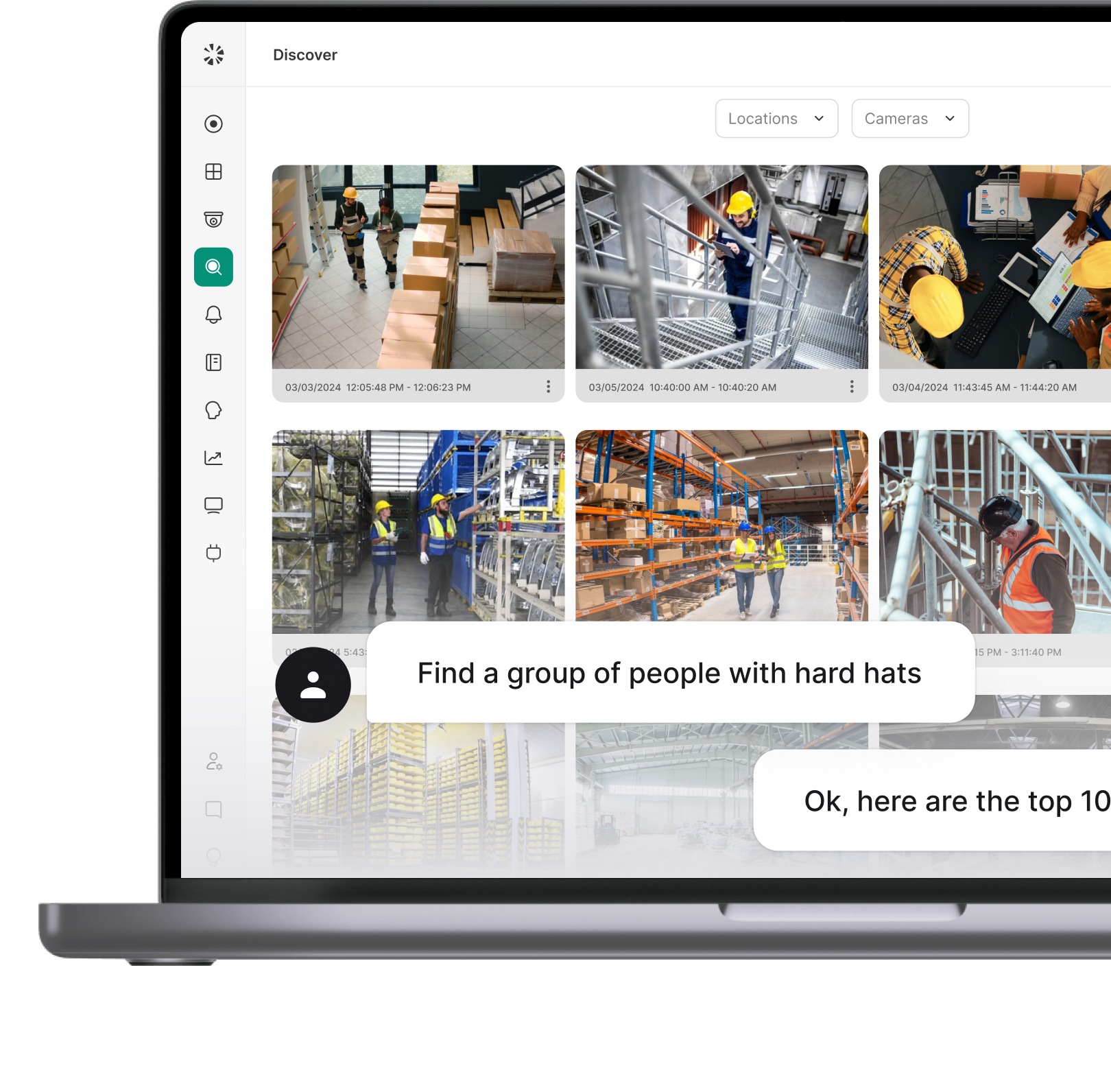

Coram’s Plan to Cryptographically Mark Video for Authenticity

As a leader in video security technology, we understand the importance of authenticating content, especially for high-stakes uses such as security investigations. Coram's video platform is frequently used by police and other authorities in critical investigations, where verifying the authenticity of video footage is essential. With this in mind, Coram plans to integrate cryptographic watermarking into its video content, allowing users to validate that videos originated from Coram’s platform without visible markings or disruptions to the footage.

In practice, this would mean that every video downloaded from Coram’s platform would carry an invisible cryptographic watermark. This watermark would confirm the video’s authenticity without altering its content or visual quality. Users could then verify the origin of any Coram video using Coram’s public key, with a valid signature serving as a stamp of authenticity. This will add an extra layer of security, helping ensure that any video content from Coram is reliable, unaltered, and legitimate.
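Signature schemes typically sign a short digest of the content rather than the content itself, and for multi-gigabyte security footage that digest is best computed in chunks instead of loading the whole file into memory. The sketch below shows that step and why it catches tampering: flipping a single bit anywhere in the file changes the digest, so a signature made over the original digest would no longer verify. This is an illustrative assumption, not Coram’s documented implementation; the function name `video_digest` and the 1 MiB chunk size are hypothetical, and the post does not specify where the signature itself would be stored.

```python
import hashlib
import io
from typing import BinaryIO

CHUNK_SIZE = 1 << 20  # 1 MiB per read; an arbitrary choice for this sketch


def video_digest(stream: BinaryIO, chunk_size: int = CHUNK_SIZE) -> str:
    """SHA-256 of a (possibly very large) file, read incrementally in chunks."""
    hasher = hashlib.sha256()
    # iter() with a sentinel keeps reading until read() returns b"" (end of stream).
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        hasher.update(chunk)
    return hasher.hexdigest()


# Stand-in bytes for an exported clip; a real file would be opened with open(path, "rb").
original = bytes(range(256)) * 10_000
tampered = bytearray(original)
tampered[123_456] ^= 0x01  # flip a single bit, e.g. one edited byte of footage

print(video_digest(io.BytesIO(original)) == video_digest(io.BytesIO(bytes(tampered))))
```

The comparison prints `False`: the single flipped bit yields a completely different digest, which is exactly the property that lets a signature over the digest vouch for every byte of the video.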

By embedding cryptographic watermarks in its videos, Coram will be pioneering a new standard for digital content verification in the security industry. The approach offers a pathway forward for other AI content creators, too, as they look for ways to authenticate their own content.

The Urgent Need for Confidently Verifying Content Authenticity

To counter the spread of AI-generated fake content, we must prioritize effective ways to verify digital authenticity. Traditional AI detectors are falling behind, as new AI models produce content that’s nearly indistinguishable from human work. Cryptographic watermarking offers a promising solution, providing a verifiable digital signature without visible changes to the content. Coram’s adoption of this technology in video security sets a critical standard, but broad implementation across media types will require industry-wide commitment. For a trustworthy digital landscape, investing in reliable verification methods is essential.
